MrDeepFakes (sometimes written “Misterdeepfake” or “MrDeepFake”) was one of the most notorious websites for nonconsensual deepfake pornography. It functioned as a platform where users could upload, download, view, and request deepfake videos that swapped people’s faces, often those of celebrities or private individuals, onto explicit content without their consent.

Launched in 2018, the domain became a central repository and marketplace for deepfake sexual content. According to reports, at its peak the site hosted tens of thousands of videos (some sources say ~70,000) and was visited millions of times per month. The site also operated discussion forums where users exchanged technical advice for creating deepfakes, negotiated custom requests, and shared community practices.

MrDeepFakes billed itself as a user-friendly hub with broad reach. But underneath that façade lay serious ethical and legal issues: many of the videos were made without any consent from those whose faces were used.

How MrDeepFakes Operated: Marketplace Dynamics & Technical Aspects

To understand MrDeepFakes’ operations, researchers have studied its market structure, user behavior, and technical facilitation.

Marketplace Structure & Monetization

- The site functioned not only as a gallery but as a marketplace: users could pay to commission custom deepfakes or to get priority access.

- Some creators were “verified creators” who could monetize their content via paid services.

- Transactions were often conducted anonymously, with cryptocurrency or indirect payment channels, making tracing more difficult.

- The site’s forums and comment areas allowed the community to share techniques, tools, tips, and datasets useful in deepfake creation.

Technical & AI Techniques

- The deepfakes typically used face mapping and generative AI (GANs, autoencoders) to blend target faces into existing explicit video footage, preserving lighting, expression, and motion to maximize realism.

- To build convincing deepfakes, large datasets of images or video frames of the target are collected to train models.

- Post-processing and manual editing were typical: creators would refine edges, correct color mismatches, smooth transitions, etc.

- The site’s forums included discussions about which software tools worked best (e.g. DeepFaceLab, FaceSwap, etc.).

Because of this ecosystem, MrDeepFakes became both a content repository and a hub for illicit deepfake expertise.

Ethical, Legal & Social Concerns

MrDeepFakes embodied many of the worst risks associated with deepfake technology. Below are primary concerns it raised:

Non-consensual Use & Privacy Violations

A foundational problem is that many of the deepfake videos used people’s likenesses without consent, violating their privacy, dignity, and personal rights. Victims included both celebrities and private individuals, whose faces were used against their will.

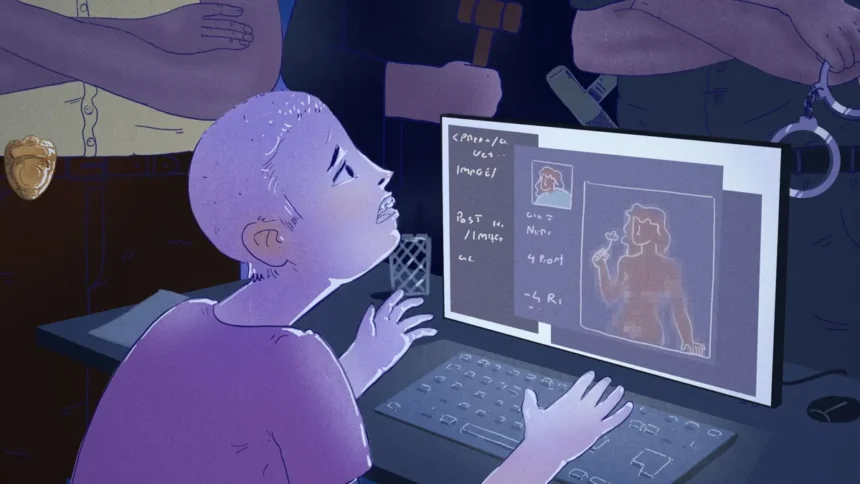

Harassment, Intimidation & Abuse

Deepfake pornography can be weaponized for harassment or blackmail. Individuals may be forced or coerced under threat of exposure. The emotional harm is severe, and for many victims, removing content is nearly impossible once it spreads.

Legal Gaps & Enforcement Challenges

- In many jurisdictions, non-consensual deepfake pornography is not yet specifically regulated. Enforcement often relies on existing laws (defamation, copyright, privacy).

- The U.S. “Take It Down Act” (passed by Congress in April 2025 and signed into law in May 2025) targets nonconsensual sexual imagery, including deepfakes, and requires platforms to remove such content within 48 hours of a request.

- The shutdown of MrDeepFakes came shortly after that legislation passed, though it’s unclear whether the law was the direct cause.

- In Australia, a man faces an AUD 450,000 fine for posting deepfake images to MrDeepFakes.
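
The 48-hour removal window mentioned above is simple date arithmetic, and a platform's takedown queue could track it roughly as follows. This is a minimal sketch, not a statement of how the law counts time: it assumes the clock starts when the request is received, and the names `REMOVAL_WINDOW`, `removal_deadline`, and `is_overdue` are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Assumption: the 48-hour window cited in the text, counted from receipt of the request.
REMOVAL_WINDOW = timedelta(hours=48)


def removal_deadline(received_at: datetime) -> datetime:
    """Return the UTC time by which the reported content should be removed."""
    if received_at.tzinfo is None:
        raise ValueError("received_at must be timezone-aware")
    return received_at.astimezone(timezone.utc) + REMOVAL_WINDOW


def is_overdue(received_at: datetime, now: datetime) -> bool:
    """Return True if the removal window has already elapsed at time `now`."""
    if now.tzinfo is None:
        raise ValueError("now must be timezone-aware")
    return now.astimezone(timezone.utc) > removal_deadline(received_at)


if __name__ == "__main__":
    received = datetime(2025, 6, 1, 12, 0, tzinfo=timezone.utc)
    print("Remove by:", removal_deadline(received).isoformat())
    print("Overdue now?", is_overdue(received, datetime.now(timezone.utc)))
```

Requiring timezone-aware timestamps avoids silently mixing local time and UTC, which matters when requests and moderators are in different regions.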

Cultural & Social Impacts

- Female celebrities were common targets; many deepfakes involved public figures such as Taylor Swift, Emma Watson, and Greta Thunberg.

- The site normalized the idea that famous individuals’ images are public property, eroding respect for personhood and consent.

- The existence of a thriving deepfake marketplace amplifies distrust in digital media, eroding the boundary between real and fake content.

Because MrDeepFakes was so central in the nonconsensual deepfake ecosystem, its influence both amplified abuse and exposed systemic vulnerabilities in how online platforms handle synthetic content.

Shutdown & Exposure: What Led to the End of MrDeepFakes

In 2025, MrDeepFakes announced it was shutting down permanently, citing the loss of a service provider and data loss. The site’s landing page now displays a “Shutdown Notice” stating that it will not relaunch.

Investigative Exposure

- Investigations by Bellingcat, CBC, Tjekdet, Politiken, and other media groups traced connections to individuals behind the site. One reported key figure is a Canadian pharmacist allegedly tied to its operation.

- The exposure helped identify how the platform avoided detection, how community trust was built, and how proxies & services were used to sustain operations.

Contributing Factors

- Loss of Service Provider: The site claimed one of its critical infrastructure providers terminated its service permanently.

- Data Loss: The notice also stated that the site had lost data in a way that made continued operation unviable.

- Legal & Regulatory Pressure: The timing closely followed new U.S. legislation targeting nonconsensual imagery.

- Public & Media Exposure: The investigative exposure likely increased risk for infrastructure providers and domain registrars to drop associations.

Though the site is offline, many have warned that its content may survive or resurface on less regulated or underground platforms (e.g. Telegram, private forums).

Detection, Countermeasures & Prevention

The MrDeepFakes saga has sharpened focus on how to detect, counter, and regulate deepfake abuse. Below are key methods and challenges.

Technical Detection Methods

- Researchers use artifact detection (inconsistencies in facial warping, lighting, blinking, edges) to flag synthetic media.

- Newer mask-and-recovery methods (such as Mover) mask parts of the face and try to recover them, exposing inconsistencies between facial parts that deepfakes struggle to reconstruct perfectly.

- Adversarially robust detectors combine multiple CNNs, or fuse their predictions, so that tampering aimed at one model is less likely to fool the whole system.

- Datasets such as WildDeepfake provide real-world cases for testing detection algorithms.
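
The prediction-fusion idea in the list above can be illustrated with a small late-fusion sketch: several detectors each output a probability that a clip is synthetic, and a weighted average decides whether the clip is flagged for human review. The detector names, weights, and the 0.5 threshold are illustrative assumptions, not values taken from any published system.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class DetectorScore:
    """Output of one hypothetical detector for a single clip."""
    name: str
    prob_fake: float      # probability in [0, 1] that the clip is synthetic
    weight: float = 1.0   # relative trust in this detector (assumed, not learned here)


def fuse_scores(scores: Sequence[DetectorScore]) -> float:
    """Late fusion: weighted average of the per-detector probabilities."""
    if not scores:
        raise ValueError("need at least one detector score")
    for s in scores:
        if not 0.0 <= s.prob_fake <= 1.0:
            raise ValueError(f"{s.name}: prob_fake must be in [0, 1]")
        if s.weight < 0:
            raise ValueError(f"{s.name}: weight must be non-negative")
    total_weight = sum(s.weight for s in scores)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(s.prob_fake * s.weight for s in scores) / total_weight


def flag_for_review(scores: Sequence[DetectorScore], threshold: float = 0.5) -> bool:
    """Flag the clip when the fused probability reaches the threshold."""
    return fuse_scores(scores) >= threshold


if __name__ == "__main__":
    clip_scores = [
        DetectorScore("artifact_cnn", 0.9),
        DetectorScore("blink_model", 0.2),
        DetectorScore("part_consistency", 0.7, weight=2.0),
    ]
    print("Fused probability:", round(fuse_scores(clip_scores), 3))
    print("Flag for review:", flag_for_review(clip_scores))
```

Averaging is the simplest fusion rule; real systems may instead learn the weights or train a separate classifier on the detectors' outputs.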

Policy, Legal & Platform Measures

- The Take It Down Act in the U.S. mandates prompt removal of nonconsensual sexual imagery.

- Many social and content platforms now ban deepfake pornography and honor takedown requests.

- Domain registrars, hosting providers, and infrastructure services may refuse to support sites implicated in nonconsensual deepfake content. The loss of service contributed to MrDeepFakes’ shutdown.

- Public awareness campaigns, legal reforms, and victim support frameworks are becoming more important in combating abuse.

Challenges & Limitations

- Deepfake detection is an arms race — methods continually evolve, and perpetrators adapt.

- Some detection tools struggle with high-quality, subtle deepfakes where artifacts are minimized.

- Legal jurisdiction fragmentation makes it hard to enforce takedowns globally.

- Once content is disseminated, it may persist beyond original hosting platforms.

The case of MrDeepFakes underscores that technical solutions alone are not enough — they must be combined with regulation, public pressure, infrastructure accountability, and victim rights.

Lessons Learned & Future Outlook

Though MrDeepFakes is offline, its legacy offers important lessons and directions for the future.

Lessons

- Transparency & Accountability Matter: The opacity of the site’s ownership and infrastructure enabled it to operate relatively unchallenged for years. Public investigations eventually increased pressure.

- Infrastructure Gatekeeping is Critical: The withdrawal of support by a “critical service provider” was cited as a key cause of shutdown. Infrastructure providers have a role in enforcing digital norms.

- Law & Policy Can Shift Incentives: The emergence of targeted legislation (e.g. in the U.S.) places pressure on platforms to remove content. These laws may deter abuse over time.

- Detection Tools Must Evolve: As synthetic media becomes more sophisticated, detection systems must stay adaptive, combining multiple techniques and leveraging large datasets.

- Survivor Support & Remediation Are Essential: Sites like MrDeepFakes leave behind victims whose images were misused. Mechanisms for takedown, compensation, counseling, and legal redress must be prioritized.

Future Outlook

- Deepfake abuse may migrate to more obscure or encrypted platforms (e.g. Telegram, private forums). The closure of mainstream hubs may scatter, not eliminate, abuse.

- Advances in detection technology, particularly in real-time detection, watermarking, and forensic traceability, will grow in importance.

- More countries may enact specific nonconsensual deepfake laws, closing legal gaps.

- Regulation and reputational risk may push digital platforms and infrastructure providers to refuse service to sites that host illegal content.

- Public awareness of deepfake risk and media literacy will need to expand so users are more skeptical of manipulated media.

Conclusion

MrDeepFakes represented both a dark frontier and a turning point in how society confronts the misuse of synthetic media. As a sprawling marketplace for nonconsensual deepfake pornography, it amplified harms of privacy violation, harassment, and technological abuse. Its eventual shutdown — driven by exposure, infrastructure withdrawal, and legislative momentum — shows that carefully coordinated pressure, regulation, and technical vigilance can force even major bad actors offline.

Yet the deeper lessons of MrDeepFakes remain: the need for robust detection, victim protection, ethical infrastructure governance, and continued evolution of law and platform norms. As deepfake tools become more capable and accessible, the battle over who controls their use—and how to defend consent and truth in digital media—will only intensify.